Project to move my blog from a private VM with Cloudflare VPN to Google Drive and Cloudflare R2 storage

- 1. Prepare my blog to deploy to web-worker.

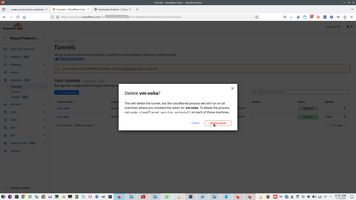

- 2. Delete ZeroTrust VPN, ZeroTrust DNS and assign Cloudflare web-worker to domain.

- 3. Upload my blog to Google drive and share Google drive.

- 4. Make local PostreSQL DB with description of file in Google Drive.

- 5. Upload project DB to Supabase and set up RLS policy.

- 6. Make core of this project - CTE function in Supabase.

- 7. Make Clouflare worker.

- 8. Make cache in Cloudflare R2.

- 9. Reduce worker size.

- 10. Plugin to my CMS what simplify adding new page.

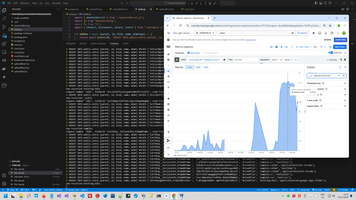

- 11. Workable project statistics.

My blog was already working fine, but at some point I decided to move it to the cloud and free it from all paid services (except the cost of domain registration).

This was a multi-step project:

1. Prepare my blog for deployment to a web worker.

The first step was to replace all web-server (server-side) tags with JavaScript. I did this with my Node project for supporting this blog.

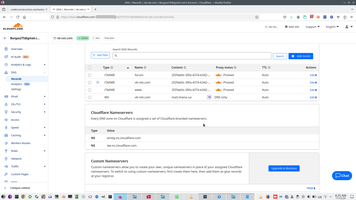

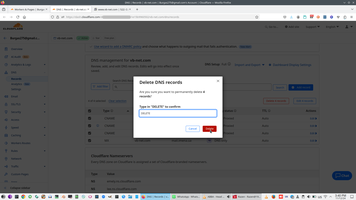
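The post does not show which server-side tags were replaced. Assuming they were SSI-style `<!--#include virtual="..." -->` directives (an assumption), a minimal sketch of the conversion could look like this: each directive becomes an empty placeholder `<div data-include="...">` (a hypothetical attribute name), and a small client-side script loads the fragments with `fetch`, so the pages no longer need a web server that processes tags.

```typescript
// Matches SSI include directives such as <!--#include virtual="/header.htm" -->
// (assumed format of the old server-side tags).
const SSI_INCLUDE = /<!--#include\s+(?:virtual|file)="([^"]+)"\s*-->/g;

// Replaces every include directive with an empty placeholder element.
// The data-include attribute name is hypothetical.
function ssiToPlaceholders(html: string): string {
  return html.replace(SSI_INCLUDE, (_match: string, path: string) =>
    `<div data-include="${path}"></div>`);
}

// Browser-side loader, kept as a string so this file also runs in Node:
// it fetches each referenced fragment and swaps it in for the placeholder.
const CLIENT_LOADER = `
document.querySelectorAll("[data-include]").forEach(async (el) => {
  const res = await fetch(el.getAttribute("data-include"));
  el.outerHTML = await res.text();
});`;

// Injects the loader script just before </body> (or appends it).
function withClientLoader(html: string): string {
  const script = `<script>${CLIENT_LOADER}</script>`;
  return html.includes("</body>")
    ? html.replace("</body>", () => `${script}</body>`)
    : html + script;
}

const page = '<body><!--#include virtual="/header.htm" --><p>Post</p></body>';
console.log(ssiToPlaceholders(page));
// <body><div data-include="/header.htm"></div><p>Post</p></body>
```

Running the conversion once over all pages (for example from the Node support project) leaves plain static HTML that a worker can serve as-is.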

2. Delete the ZeroTrust VPN and ZeroTrust DNS, and assign the Cloudflare web worker to the domain.

3. Upload my blog to Google Drive and share it.

There are many tools for this; I prefer https://cyberduck.io/ because my blog is huge: over the last 20 years it has grown to thousands of pages and about 30 GB (not counting various documentation).

The upload took about a full day.

4. Create a local PostgreSQL DB describing the files in Google Drive.

This was a separate project using the Google API: My workable Google Drive project template.

A local copy of my blog structure lets me quickly deploy the project DB to Supabase (my current solution) or, potentially, to another cloud (anything from Firebase to Cloudflare R2).

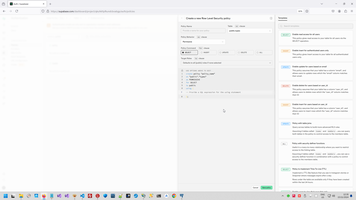
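A minimal sketch of turning a Google Drive file listing into rows for the local PostgreSQL table. It assumes the listing came from the Drive API v3 `files` resource (`id`, `name`, `mimeType`, `parents`, `size`); the row shape and the `drive_files` table name are hypothetical, not the actual schema of this project.

```typescript
// Subset of the Drive API v3 "files" resource used here.
interface DriveFile {
  id: string;
  name: string;
  mimeType: string;
  parents?: string[];
  size?: string; // Drive returns int64 fields as strings
}

// Row for a hypothetical table drive_files(id, name, parent_id, is_folder, size_bytes).
interface FileRow {
  id: string;
  name: string;
  parentId: string | null;
  isFolder: boolean;
  sizeBytes: number | null;
}

// MIME type Google Drive uses for folders.
const FOLDER_MIME = "application/vnd.google-apps.folder";

function toRows(files: DriveFile[]): FileRow[] {
  return files.map((f) => ({
    id: f.id,
    name: f.name,
    // Take the first parent; items without one get NULL.
    parentId: f.parents && f.parents.length > 0 ? f.parents[0] : null,
    isFolder: f.mimeType === FOLDER_MIME,
    // Folders have no size field; keep NULL rather than 0.
    sizeBytes: f.size !== undefined ? Number(f.size) : null,
  }));
}

const sampleRows = toRows([
  { id: "F1", name: "Articles", mimeType: FOLDER_MIME, parents: ["ROOT"] },
  { id: "D1", name: "Index.htm", mimeType: "text/html", parents: ["F1"], size: "2048" },
]);
console.log(sampleRows);
```

Inserting these rows with parameterized queries from any PostgreSQL client avoids quoting problems with file names.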

5. Upload the project DB to Supabase and set up an RLS policy.

This is a separate project.

6. Build the core of this project: a recursive CTE function in Supabase.

I describe this task in a separate post: "Recursive CTE Supabase function is key feature for built this blog".

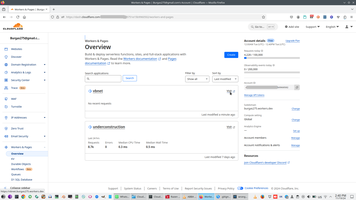

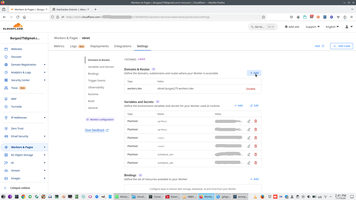
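The separate post covers the real function. As a rough illustration of what a recursive CTE does over such a file table, here is the same walk expressed in TypeScript over in-memory rows: the anchor member selects the root folder's children, and the recursive member repeatedly joins children onto the rows found so far, building each file's path. The table, column and function names are hypothetical.

```typescript
// One row of a hypothetical file table.
interface TreeRow {
  id: string;
  name: string;
  parentId: string | null;
}

// Same result as this recursive CTE (hypothetical table/column names):
//   WITH RECURSIVE tree(id, path) AS (
//     SELECT id, name FROM drive_files WHERE parent_id = :root          -- anchor
//     UNION ALL
//     SELECT f.id, t.path || '/' || f.name
//     FROM drive_files f JOIN tree t ON f.parent_id = t.id             -- recursive step
//   )
//   SELECT path, id FROM tree;
function buildPaths(rows: TreeRow[], rootId: string): Map<string, string> {
  // Index children by parent so each "join" is a map lookup.
  const children = new Map<string, TreeRow[]>();
  for (const r of rows) {
    if (r.parentId === null) continue;
    const list = children.get(r.parentId) ?? [];
    list.push(r);
    children.set(r.parentId, list);
  }

  const pathToId = new Map<string, string>();
  // Anchor member: direct children of the root folder.
  let frontier = (children.get(rootId) ?? []).map((n) => ({ id: n.id, path: n.name }));
  // Recursive member: join children onto the last level until no rows are added.
  while (frontier.length > 0) {
    const next: { id: string; path: string }[] = [];
    for (const { id, path } of frontier) {
      pathToId.set(path, id);
      for (const child of children.get(id) ?? []) {
        next.push({ id: child.id, path: `${path}/${child.name}` });
      }
    }
    frontier = next;
  }
  return pathToId;
}

const tree: TreeRow[] = [
  { id: "F1", name: "Articles", parentId: "ROOT" },
  { id: "D1", name: "Index.htm", parentId: "F1" },
];
console.log(buildPaths(tree, "ROOT").get("Articles/Index.htm")); // D1
```

Like `UNION ALL` in a recursive CTE, this loop assumes the folder tree has no cycles; doing the walk inside the database means the worker needs only one call to resolve a URL.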

7. Build the Cloudflare worker.

I have uploaded the first version of this worker, without caching, to GitHub: https://github.com/Alex1998100/CloudflareAPI

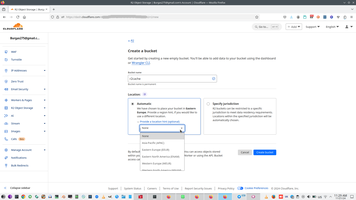

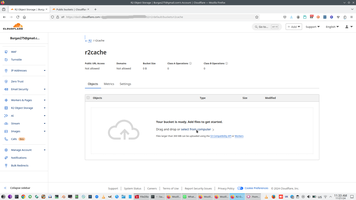

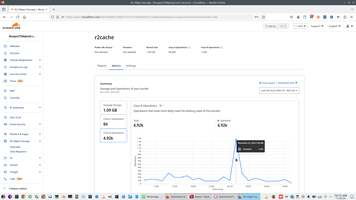
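Serving a page from such a worker involves a few small pure steps around the network calls: normalize the URL path into the lookup key, pick a Content-Type, and build the Drive download URL for the file ID returned by the Supabase function. A sketch of those pure parts, assuming `Index.htm` as the default page for folder URLs and assuming the worker downloads content through the Drive API v3 `files/{fileId}?alt=media` endpoint; the ID lookup and the authenticated `fetch` are left out, and the content-type table is illustrative.

```typescript
// Maps a request path to the path stored in the file DB.
// Folder URLs fall back to Index.htm (assumed default page name,
// matching URLs such as /Articles/Index.htm).
function toLookupPath(pathname: string): string {
  let p = decodeURIComponent(pathname).replace(/^\/+/, "");
  if (p === "" || p.endsWith("/")) p += "Index.htm";
  return p;
}

// Illustrative extension-to-Content-Type table.
const CONTENT_TYPES = new Map<string, string>([
  ["htm", "text/html; charset=utf-8"],
  ["html", "text/html; charset=utf-8"],
  ["css", "text/css"],
  ["js", "text/javascript"],
  ["png", "image/png"],
  ["jpg", "image/jpeg"],
  ["jpeg", "image/jpeg"],
  ["gif", "image/gif"],
  ["svg", "image/svg+xml"],
]);

function contentTypeFor(path: string): string {
  const file = path.slice(path.lastIndexOf("/") + 1);
  const dot = file.lastIndexOf(".");
  const ext = dot >= 0 ? file.slice(dot + 1).toLowerCase() : "";
  return CONTENT_TYPES.get(ext) ?? "application/octet-stream";
}

// Drive API v3 download URL for a file's content
// (the request needs credentials, typically an OAuth access token).
function driveMediaUrl(fileId: string): string {
  return `https://www.googleapis.com/drive/v3/files/${encodeURIComponent(fileId)}?alt=media`;
}

console.log(toLookupPath("/Articles/"), contentTypeFor("Articles/Index.htm"));
// Articles/Index.htm text/html; charset=utf-8
```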

8. Add a cache in Cloudflare R2.

Without caching, my blog worked slowly, so I decided to add a Cloudflare R2 cache. Useful references: Cloudflare R2 Bucket, plus additional information on the Cloudflare Cache API and Cloudflare Cache limits.

With the cache in place, my blog works fine and faster.

Like ZeroTrust, Cloudflare R2 requires a bank card.

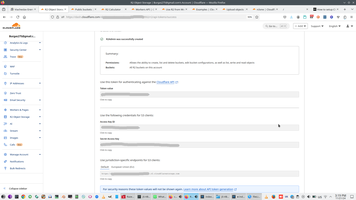

First we need to create a bucket, then an API token.

Then we use that token to upload data, because uploading through the web form has limitations.

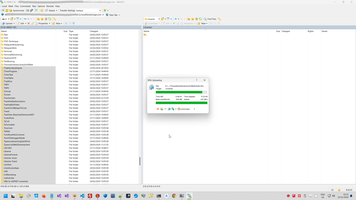

To upload data over the Amazon S3 protocol I used, as usual, WinSCP (https://winscp.net/eng/index.php). This is also a long operation: the upload takes many hours.
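For reference, these are the S3-compatible connection settings R2 expects, whichever S3 client is used: a per-account endpoint host, region `auto`, and the access key ID and secret generated together with the R2 API token. The helper and its parameter names are only an illustration.

```typescript
// S3-compatible connection settings for a Cloudflare R2 bucket.
interface R2S3Settings {
  endpoint: string;
  region: string;
  bucket: string;
  accessKeyId: string;
  secretAccessKey: string;
}

// Hypothetical helper: the key pair comes from the R2 API token,
// the account ID from the Cloudflare dashboard.
function r2S3Settings(
  accountId: string,
  bucket: string,
  accessKeyId: string,
  secretAccessKey: string,
): R2S3Settings {
  return {
    // R2's S3 API is served from a per-account host.
    endpoint: `https://${accountId}.r2.cloudflarestorage.com`,
    // R2 has no AWS-style regions; "auto" is the value it expects.
    region: "auto",
    bucket,
    accessKeyId,
    secretAccessKey,
  };
}

console.log(r2S3Settings("<account-id>", "blog", "<key-id>", "<secret>").endpoint);
// https://<account-id>.r2.cloudflarestorage.com
```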

Cloudflare R2 wraps the stored data with some additional attributes:

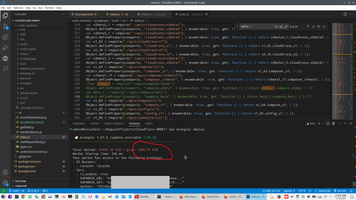

The cache code itself is extremely simple, just 7 lines:

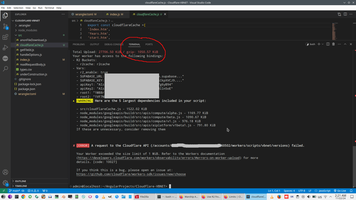
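The 7-line cache code is only in the screenshot. A slightly expanded sketch of the same idea, assuming a cache-aside pattern restricted to the keys in the worker's cache file list: `ObjectStore` is a simplified, hypothetical stand-in for the R2 bucket binding (the real `R2Bucket.get()` resolves to an object with a body stream, not a string), and `loadFromOrigin` stands in for the Supabase lookup plus Google Drive download. Since the bucket here was filled in bulk with WinSCP, the write-on-miss step may be unnecessary, so it is marked as optional.

```typescript
// Simplified, hypothetical stand-in for an R2 bucket binding.
// (The real R2Bucket.get() resolves to an object with a body stream, or null.)
interface ObjectStore {
  get(key: string): Promise<string | null>;
  put(key: string, value: string): Promise<void>;
}

// Cache-aside lookup limited to keys in the worker's cache file list.
async function getCached(
  store: ObjectStore,
  key: string,
  loadFromOrigin: (key: string) => Promise<string>,
  cacheable: Set<string>,
): Promise<{ body: string; hit: boolean }> {
  if (cacheable.has(key)) {
    const cached = await store.get(key);
    if (cached !== null) return { body: cached, hit: true };
  }
  // Slow path: Supabase lookup plus Google Drive download in the real worker.
  const body = await loadFromOrigin(key);
  // Optional: keep a copy so the next request is served from the store.
  if (cacheable.has(key)) await store.put(key, body);
  return { body, hit: false };
}

// In-memory store for trying the logic locally.
class MemoryStore implements ObjectStore {
  private data = new Map<string, string>();
  async get(key: string): Promise<string | null> {
    return this.data.get(key) ?? null;
  }
  async put(key: string, value: string): Promise<void> {
    this.data.set(key, value);
  }
}

const store = new MemoryStore();
const cacheList = new Set(["Articles/Index.htm"]);
const loadPage = async (key: string) => `<html>${key}</html>`;
(async () => {
  const first = await getCached(store, "Articles/Index.htm", loadPage, cacheList);
  const second = await getCached(store, "Articles/Index.htm", loadPage, cacheList);
  console.log(first.hit, second.hit); // false true
})();
```

Keeping the store behind a small interface makes the hit/miss logic testable without deploying the worker.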

9. Reduce the worker size.

Unfortunately, the free Workers plan has limits, and R2 adds more, so every attempt to deploy a real worker exceeded them. A paid plan is not an option for a noncommercial blog like mine, so the next step was to reduce the JS size.

In my case, the first candidate was the Google API code, which I reworked manually.
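The post does not show how the Google API code was trimmed. One common way to keep a Google client library out of a worker bundle is to call the Drive REST endpoints directly with `fetch`; this sketch only builds a Drive API v3 `files.list` request for the children of a folder. Obtaining the access token (for example from a service account) is out of scope, and the function name is hypothetical.

```typescript
// Builds a Drive API v3 files.list request by hand, using only strings,
// so no client library has to be bundled into the worker.
function buildListChildrenRequest(
  folderId: string,
  accessToken: string,
  pageToken?: string,
): { url: string; headers: Record<string, string> } {
  const params: Record<string, string> = {
    // Drive search syntax: children of folderId that are not in the trash.
    // Drive file IDs do not contain quotes, so no escaping is done here.
    q: `'${folderId}' in parents and trashed = false`,
    fields: "nextPageToken, files(id, name, mimeType, size)",
    pageSize: "1000", // maximum page size files.list accepts
  };
  if (pageToken) params.pageToken = pageToken;
  const query = Object.entries(params)
    .map(([k, v]) => `${encodeURIComponent(k)}=${encodeURIComponent(v)}`)
    .join("&");
  return {
    url: `https://www.googleapis.com/drive/v3/files?${query}`,
    headers: { Authorization: `Bearer ${accessToken}` },
  };
}

console.log(buildListChildrenRequest("<folder-id>", "<token>").url);
```

In the worker, `fetch(req.url, { headers: req.headers })` would then return the JSON listing; repeating the call with `nextPageToken` until it is absent covers large folders.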

10. A plugin for my CMS that simplifies adding new pages.

This project is finished, but publishing its code would mean publishing my whole CMS, which is not my goal right now.

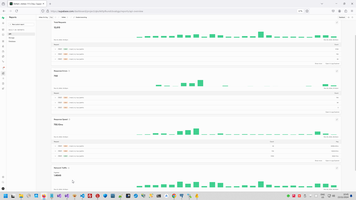

This plugin lets me automatically:

- add a new post to the year section and to the theme section,

- make the page footer and header,

- upload the new page to the cloud,

- resize images,

- create new DB records for the new topic,

- create the articles page,

- make changes in the local PostgreSQL DB,

- upload the changes to the Supabase DB,

- make a new cache file list,

- upload the new cache list to the worker project,

- redeploy the worker with the new cache file list.
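The last three steps (make the cache file list, upload it to the worker project, redeploy) can be sketched as generating a small module that the worker imports. The file, constant and function names are hypothetical; after the output is written into the worker project, a redeploy with `npx wrangler deploy` picks up the new list.

```typescript
// Generates the source of a module the worker can import, e.g.
// import { CACHE_FILES } from "./cache-files";  (hypothetical names)
function renderCacheListModule(paths: string[]): string {
  // Strip leading slashes, de-duplicate and sort for a stable output.
  const unique = Array.from(new Set(paths.map((p) => p.replace(/^\/+/, "")))).sort();
  const lines = unique.map((p) => `  ${JSON.stringify(p)},`);
  return [
    "// Generated by the CMS plugin; do not edit by hand.",
    "export const CACHE_FILES: ReadonlySet<string> = new Set([",
    ...lines,
    "]);",
    "",
  ].join("\n");
}

console.log(renderCacheListModule(["/Articles/Index.htm", "Index.htm", "/Index.htm"]));
```

Sorting and de-duplicating keeps the generated file stable, so an unchanged set of pages produces no diff and no needless redeploy.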

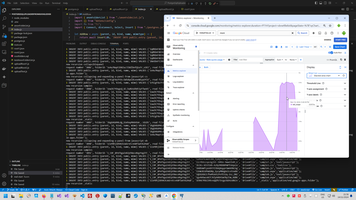

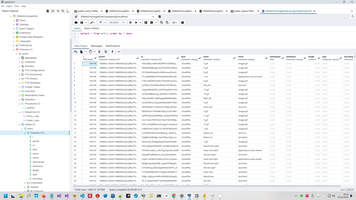

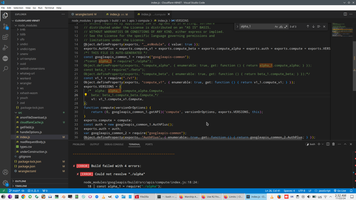

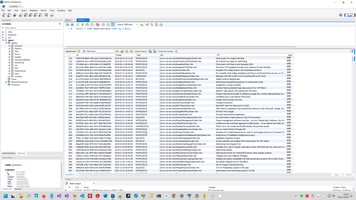

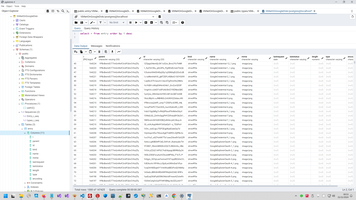

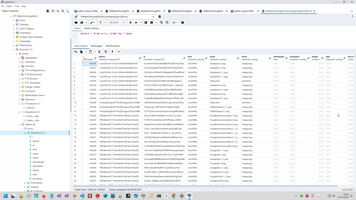

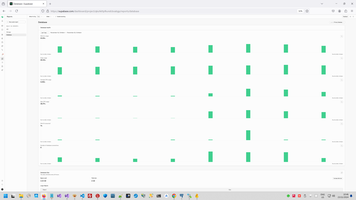

For example, the screenshot below shows the DB with about 1,000 main entry pages of my blog. The Articles page https://www.vb-net.com/Articles/Index.htm is one of the pages built automatically from this DB.

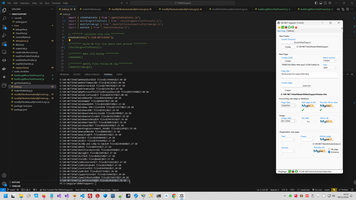

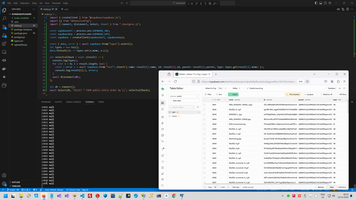

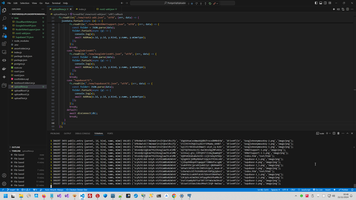
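As an illustration of building a page like Articles/Index.htm from the DB, here is a sketch that groups rows by year and renders a simple HTML index. It assumes each row has a title, URL and year (hypothetical column names); the real page layout is not shown in the post.

```typescript
// Hypothetical shape of one DB row describing an article.
interface ArticleRow {
  title: string;
  url: string;
  year: number;
}

// Escapes text for safe use in HTML content and attribute values.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Groups rows by year (newest first) and renders one <h2> and <ul> per year.
function renderArticlesIndex(rows: ArticleRow[]): string {
  const byYear = new Map<number, ArticleRow[]>();
  for (const r of rows) {
    const list = byYear.get(r.year) ?? [];
    list.push(r);
    byYear.set(r.year, list);
  }
  const years = Array.from(byYear.keys()).sort((a, b) => b - a);
  return years
    .map((y) => {
      const items = byYear
        .get(y)!
        .map((r) => `<li><a href="${escapeHtml(r.url)}">${escapeHtml(r.title)}</a></li>`)
        .join("");
      return `<h2>${y}</h2><ul>${items}</ul>`;
    })
    .join("\n");
}

console.log(renderArticlesIndex([
  { title: "First post", url: "/2023/a.htm", year: 2023 },
  { title: "Moving to R2", url: "/2024/b.htm", year: 2024 },
]));
```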

As another example, I decided to update a couple of screenshots in my last post; here you can see some of my code and how additional rows are added to the local PostgreSQL DB behind this blog.

That's it for the last part of my CMS, which supports my blog in its new incarnation with these additional components:

- Supabase

- PostgreSQL

- Google API

- Google Drive

- Cloudflare Worker

- Cloudflare R2

- WinSCP

- Cyberduck

instead of my old CMS, which worked with Cloudflare ZeroTrust.

This looks a little more complex than my old CMS version, but my blog is still exposed to the internet absolutely free. And because these are the environments and tools I have been programming with in recent years, I completed the whole project very quickly, in less than a week.

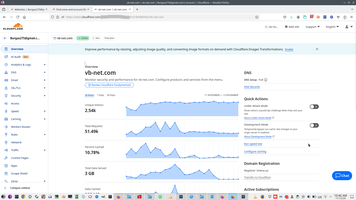

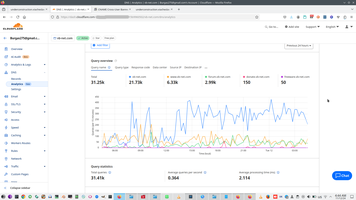

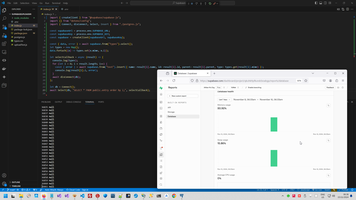

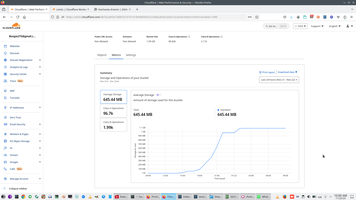

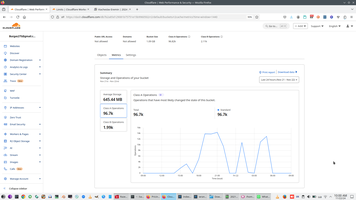

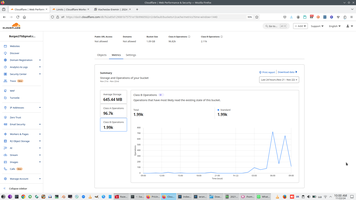

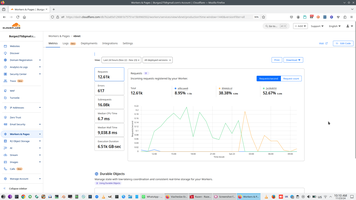

11. Working project statistics.

So my project is still exposed to the internet absolutely free.

And that's all about this project: "Moving my blog from Cloudflare ZeroTrust to Cloudflare R2".

