Parse CSV with Node

1. What I need.

I have a lot of pages to processing CSV in .NET environment Office, including parsing extremely large CSV files. But how too working with CSV files in NODE.JS environment?

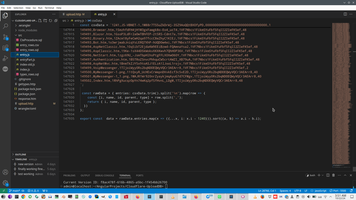

This is example of my CSV https://github.com/Alex1998100/CloudflareD1/blob/main/src/entryPart.js - question is how to parse it to JS object? And usually we need to start not from first row.

Of course, if you have a file system, you need read firstly CSV from filesystem, something like this:

1: fetch('/csv/chunk1.csv')

2: .then(response => response.text())

3: .then(csvChunk => processCSV(csvChunk));

4:

5: // Load next chunk when user scrolls to the bottom

6: window.addEventListener('scroll', () => {

7: if (isNearBottom()) {

8: fetch('/csv/chunk2.csv') // Or determine the correct chunk number

9: .then(...)

10: .then(csvChunk => appendCSVData(csvChunk)); // Append to existing data

11: }

12: });

But this is Cloudflare Worker code, and we have no filesystem.

Therefore we need to define CSV something like this:

1: const csvData = `1241,JS-VBNET-1,1M88r7TS5uZkDrWj-3SZ9AoQQtBXOfyPD,000000000000000000000000000000000,1

2: 1242,JS-VBNET-2,1VF7Nbcs1FikmSYuFbf5FqII2ZIeFK5ef,000000000000000000000000000000000,1

3: ...

4: 149502,Index.htm,1RhPgXucqzOpYn74wKq2pfSfHvnL_LDgM,1TIjoiWyy0Rs2bqNDOEQWyVQCr3AEArr8,48

5: `;

6:

7: const rawData = { entries: csvData.trim().split('\n').map(row => {

8: const [i, name, id, parent, type] = row.split(',');

9: return { i, name, id, parent, type };

10: })

11: };

12:

13: export const data = rawData.entries.map(x => ({...x, i: x.i - 1240})).sort((a, b) => a.i - b.i);

2. My first Sync solution.

There are a couple way to make this task. First, most idiotic way is my first synchronous uploader, something like this:

1:

2: import {data} from "./entry.js"

3:

4: const endID = pathname.split("/")[2];

5: //console.log(endID);

6: let startUploading = false;

7: for (const x of data) {

8: //console.log(x.id, x.id == endID);

9: if (x.id == endID) {

10: //console.log(x.id, x.id == endID);

11: startUploading = true;

12: continue; // This will now work correctly

13: }

14: if (startUploading) {

15: console.log(`Uploading started next records after ${endID}`);

16: const sqlQuery = env.MY_TOPIC.prepare(

17: "INSERT INTO entry (i, name,id,parent,type) values (?,?,?,?,?)"

18: ).bind(x.i, x.name, x.id, x.parent, x.type);

19: try {

20: const { success } = await sqlQuery.run();

21: if (success) {

22: //console.log(x.i);

23: } else {

24: console.log(`Error ${x.i}`);

25: }

26: } catch (error) {

27: console.log(`${pathname} - ${error}`);

28: }

29: }

30: }

31: return Response.json("ok");

3. My Async solution.

In next step I changed my solution to Asynchronous way with Promise.all and replaced for (const x of data) { to Slicing the Array:

1: import { data } from "./entry.js";

2:

3: const endID = pathname.split("/")[2];

4: if (!endID) {

5: return new Response("No ID specified", { status: 400 });

6: }

7: try {

8: await uploadData(data, endID);

9: return new Response("ok");

10: } catch (error) {

11: console.error("Upload failed:", error);

12: }

13: return new Response.json("ok");

14:

15:

16: async function uploadData(data, endID) {

17: //Slicing the Array: data.slice is used to reduce the work done, including only the records after the endID record.

18: const recordsToInsert = data.slice(data.findIndex(x => x.id === endID) + 1)

19: // This executes all the database insertion Promises concurrently, significantly speeding up the process,

20: return Promise.all(recordsToInsert.map(async x => {

21: const sqlQuery = env.MY_TOPIC.prepare(

22: "INSERT INTO entry (i, name, id, parent, type) values (?,?,?,?,?)"

23: ).bind(x.i, x.name, x.id, x.parent, x.type);

24: try {

25: const { success } = await sqlQuery.run();

26: if (!success) {

27: console.log(`Error inserting record: ${JSON.stringify(x)}`);

28: }

29: } catch (error) {

30: console.error(`Error inserting record: ${JSON.stringify(x)}, error: ${error}`);

31: }

32: }));

33: }

4. Papa Parser

But this is not final solution! This is just only first step to deep dive to CSV processing in Javascript.

There are special library to working with large CSV files - https://www.papaparse.com/ Papa Parser allow to reach ultimate performance by combining Lazy Loading and Streaming Parsing.

For the ultimate performance, you can even combine both techniques. You can lazy load CSV chunks from the server and then stream-parse each chunk individually. This minimizes network requests, memory usage, and improves perceived performance. However, this combination relies on allowing network requests which you previously stated as something you wanted to avoid. If network requests are strictly prohibited, then embedding the entire file as a string and stream parsing locally is the only possible solution. A bundler should be used to inject the data and keep the actual code itself clean.

- • Ease of use: Its simple API makes parsing CSVs very straightforward, even for complex files.

- • Flexibility: It can handle many CSV variations, such as different delimiters (not just commas), different quote characters, and different line endings.

- • Header row support: It can automatically detect and use the header row to create objects with named properties.

- • Streaming: For very large CSV files, it supports streaming parsing, which processes the data in chunks, preventing the browser from becoming unresponsive due to excessive memory usage. This is essential for performance with large datasets.

- • Web Workers (browser): Papa Parse supports using Web Workers to parse CSV data in the background, preventing the parsing process from blocking the main thread, leading to a smoother user experience.

- • Error handling: It provides robust error handling to catch and report issues during parsing.

- • Auto-detection: It automatically detects delimiters, quotes, and other CSV parameters if they aren't specified.

- • Transformations: It allows you to apply custom transformations to the parsed data.

And this is example:

1: // Basic parsing to an array of arrays

2: Papa.parse(csvString, {

3: complete: function(results) {

4: console.log("Finished:", results.data); // Array of arrays

5: }

6: });

7: // Parsing to an array of objects using the header row

8: Papa.parse(csvString, {

9: header: true, // Use the first row as the header

10: dynamicTyping: true, // Attempt to convert strings to their numeric/boolean equivalents.

11: complete: function(results) {

12: console.log("Finished:", results.data); // Array of objects

13: }

14: });

15: // Streaming Example

16: Papa.parse(csvString, {

17: header: true,

18: chunkSize: 1024 * 1024 * 50, // Process in 50MB chunks (adjust as needed)

19: chunk: function(results, parser) {

20: // Process each 50MB chunk here:

21: console.log("Chunk:", results.data)

22: },

23: complete: function() {

24: console.log("All chunks processed!")

25: }

26: });

NodeBackend context:

)

)

|

|